Once upon a time in the world of Machine Learning (yep, just like your 3rd grade stories - couldn't come up with something better), a group of very smart humans created something that seemed magical: Large Language Models (LLMs). These models, like ChatGPT, could write essays, generate code, and even try their hand at humor. But as anyone who's ever tried to follow a YouTube DIY video knows, things aren't always as easy as they look. Especially when it comes to math.

Chapter 1: Meet GSM8K—The Grade School Math Test

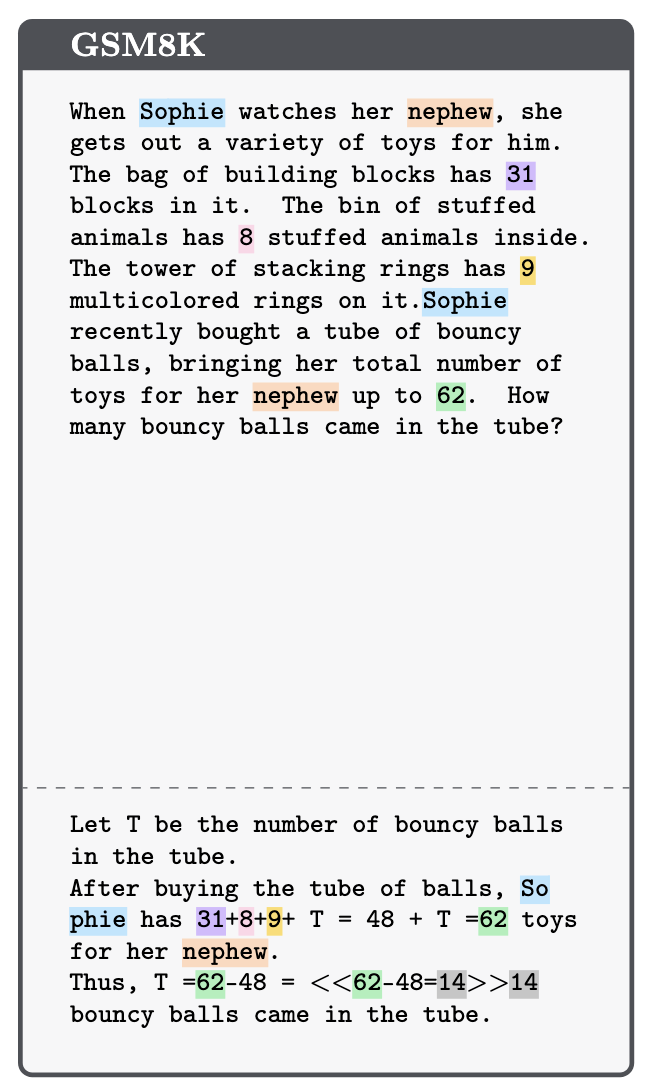

Picture this: you're sitting in class, and your teacher hands out a test full of fifth-grade math problems. Now imagine you're an AI model, taking that same test. That's GSM8K, a dataset with 8,000 grade-school math problems designed to see if these LLMs can think like humans. Spoiler alert: they can't. But why?

It turns out, LLMs are like that one kid who memorizes the answers to last year's test instead of learning the material. They might crush a question they've seen before, but tweak it a little, and they're lost. Turns out, pattern matching isn't the same as problem-solving. Who would have thought, right?

Chapter 2: Enter GSM-Symbolic, The Tougher Test

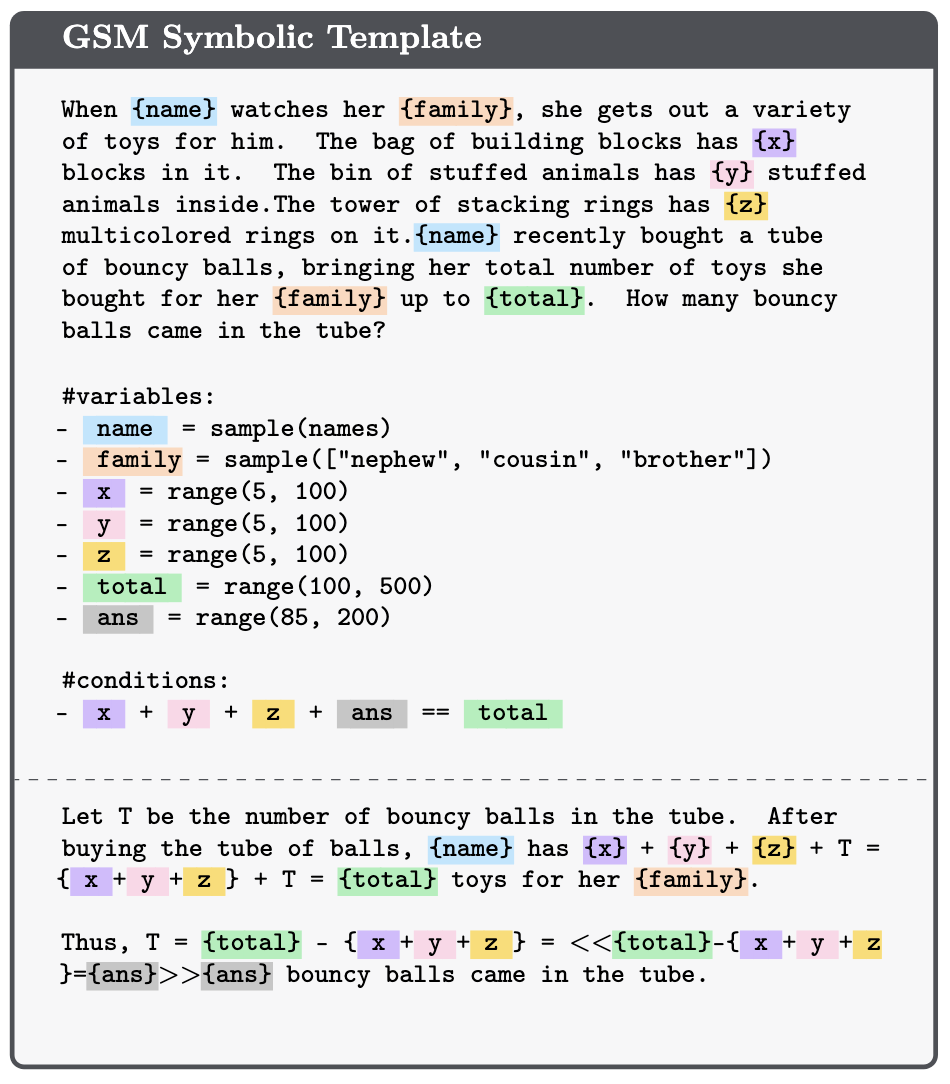

The researchers behind this study weren't convinced GSM8K was challenging enough. So, they created GSM-Symbolic. Think of it like asking someone to solve "2 + 2" and then switching it up: "If you have 2 apples and want 4 fruits total, how many bananas do you need?" Same math, but now there's a story involved.

GSM-Symbolic generates variations of questions by swapping numbers or rephrasing them. The results? Even small changes made the models stumble. It's like asking someone to do a cartwheel on a slightly different patch of grass and watching them faceplant.

Chapter 3: The Fragility of AI Math Skills

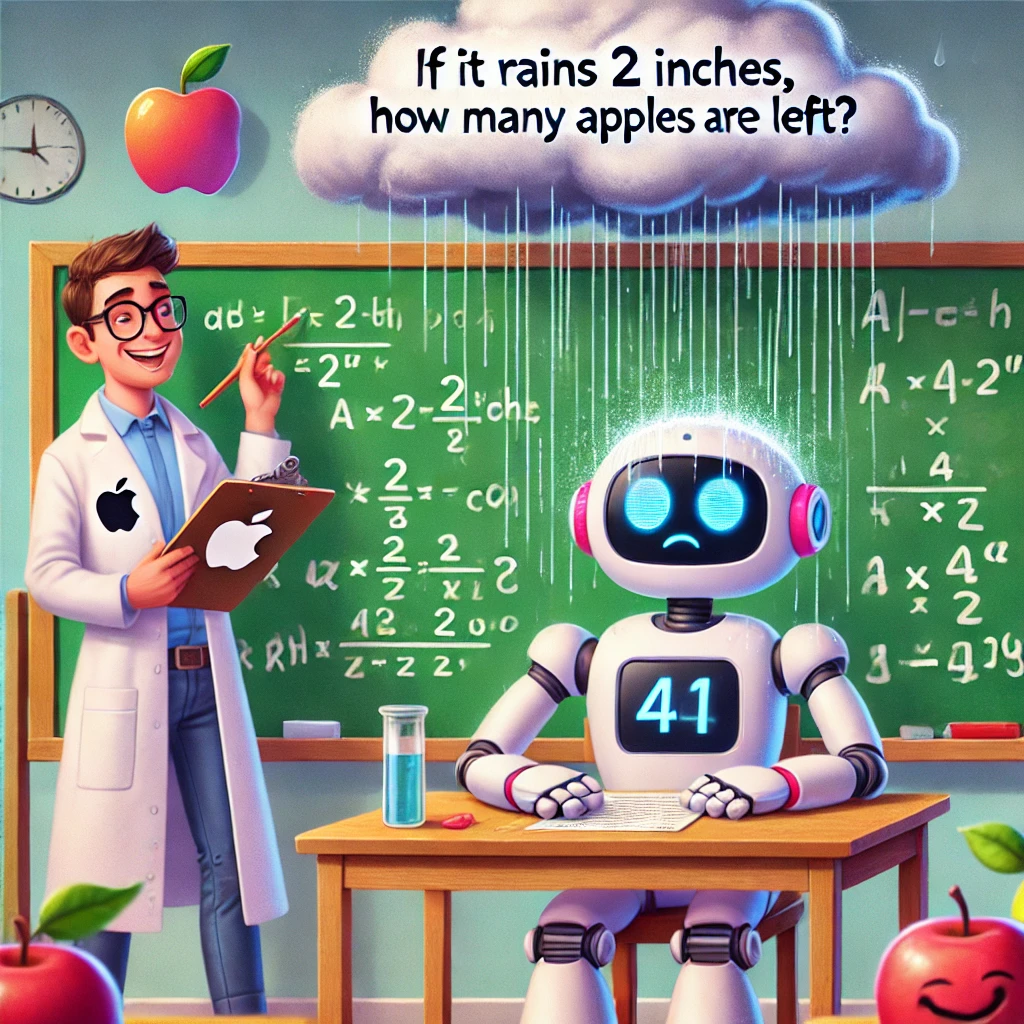

Things got worse when problems became more complex. Imagine a question with extra details like, "By the way, it was raining that day." Humans would ignore the irrelevant bit, but LLMs acted like the rain was part of the math and got completely thrown off.

In one test, researchers added a random, unrelated clause to questions, and the models' performance dropped by as much as 65%. That's like forgetting how to count just because someone mentioned it's sunny outside.

Chapter 4: Lessons from GSM-NoOp

Next up: GSM-NoOp. This test sprinkled irrelevant but "important-sounding" info into math problems to see if models could ignore it. Spoiler: they couldn't.

For example:

"Oliver picks 44 kiwis on Friday, 58 on Saturday, and double the Friday count on Sunday. Five kiwis were smaller than average. How many kiwis does Oliver have?"

The models subtracted the 5 smaller kiwis (why though?) and got the answer wrong. A human would laugh at the absurdity and move on. The models? They panicked.

Chapter 5: The Takeaway

So, what does this all mean? LLMs aren't math geniuses; they mimic patterns. They're great at writing essays or generating code but fall apart when real logical reasoning is needed.

The researchers showed us that while LLMs are impressive, they're far from perfect. To make them truly capable of understanding, we need smarter training methods and better benchmarks—and maybe teach them not to freak out over imaginary rain.

Epilogue: The Future of AI Reasoning

As we wrap this up, remember: AI models are still learning. Just like you wouldn't expect a toddler to do calculus, we can't expect today's LLMs to ace complex reasoning. But with curated datasets like GSM-Symbolic and GSM-NoOp and various other tools, researchers are pushing the boundaries. Who knows? Maybe one day, they'll solve math problems without breaking a sweat.

Until then, we'll keep teaching them, one symbolic template at a time. And maybe, just maybe, they'll stop panicking about small kiwis and rain.

💡Note: For those interested in diving deeper into the technical details and implementation, I recommend checking out the original article here.